When Dr. Donald L. Kirkpatrick wrote his Ph.D. dissertation, “Evaluating Human Relations Programs for Industrial Foremen and Supervisors,” he created the Kirkpatrick Model for evaluating training. He built on ideas from industrial-organizational psychologist Dr. Raymond Katzell and applied them to his supervisory training program in the 1950s.

Trainers around the world use the Kirkpatrick Model, which has become the standard for demonstrating the effectiveness of training programs. The latest version dates from 2016, when Kirkpatrick’s son, Dr. Jim Kirkpatrick, and Wendy Kayser Kirkpatrick slightly modified the four levels to better fit today’s technology and working environments. This version is called the New World Kirkpatrick Model.

Before we get into detail about the Kirkpatrick Model, let’s look at some statistics.

Learning and Development (L&D) in the Modern World

L&D is more cross-functional and strategic than ever before. Increasingly, L&D professionals are collaborating with executives to design employee experiences with human needs at the center. Tying “people strategy” to “business strategy” helps create more holistic work environments.

- The top four focus areas of L&D for 2023 were:

  - Aligning learning programs with business goals.

  - Upskilling employees.

  - Creating a culture of learning.

  - Improving employee retention.

- 89 percent of L&D pros agree that proactively building employee skills for today and tomorrow will help navigate the evolving future of work.

- 93 percent of organizations are concerned about employee retention.

As you can see, L&D teams understand the importance of high-quality training in maintaining and updating employees’ skills and knowledge. However, modern training is neither easy nor inexpensive, and it can be a tough sell. Using evaluation models (like the Kirkpatrick Model) helps trainers prove the effectiveness of their training programs to stakeholders.

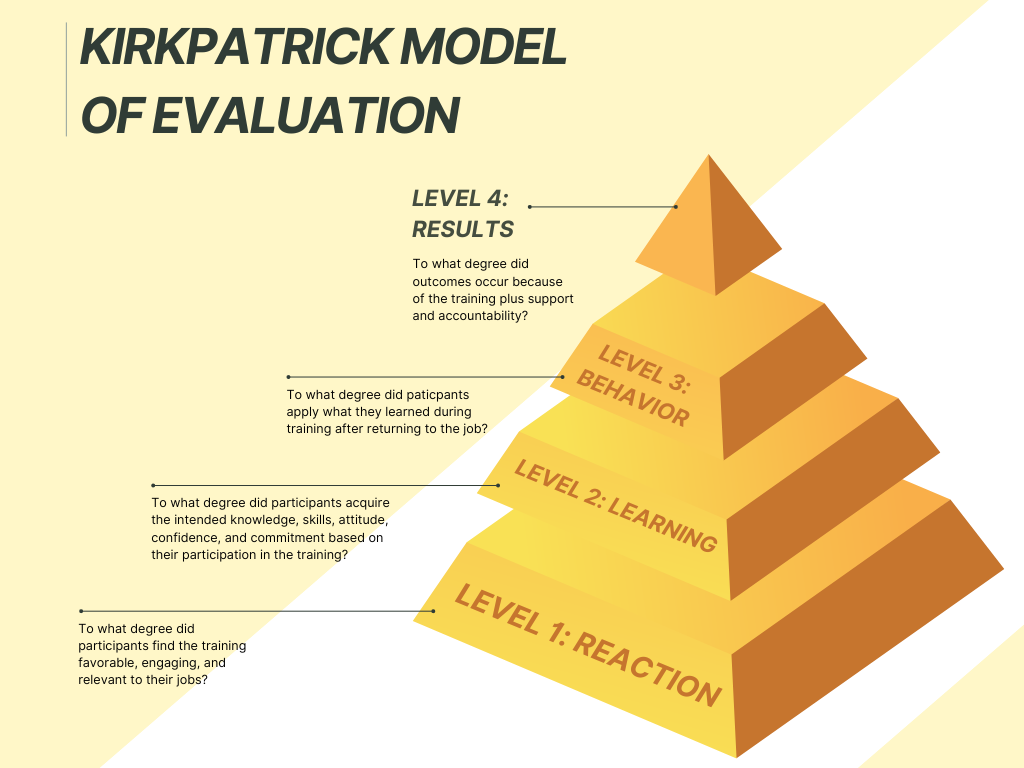

The Kirkpatrick Model’s Four Levels

Level 1: Reaction

Level 1 evaluates to what degree participants find the training favorable, engaging, and relevant to their jobs.

Level 1 is the most common evaluation stage. It requires a trainer to evaluate how learners react to the training by asking thought-provoking questions. It’s imperative to determine whether participants enjoyed the experience and whether they found the material useful. Responses help trainers and instructional designers (IDs) improve training for future use, and evaluators get a sense of how learners received the program. Assessing training effectiveness at this level shows whether delivery or content needs adjustment.

One caveat: Level 1 responses help gauge how invested learners will be at the next level, but the signal is one-sided. A positive reaction does not necessarily mean the individual will engage in the next level of training, whereas a negative reaction almost always means that person will be less likely to pay attention at that level.

Tools and techniques

- Online assessments.

- Interviews.

- Written or oral reports that delegates provide to their supervisor(s).

- Post-training survey (aka “smile sheet”) including these questions:

  - Were you happy with the educational tools used? (PowerPoint, video, scenarios, assessments, etc.)

  - In what ways did you enjoy the training?

  - How did you feel after finishing the training?

  - What were the biggest strengths and weaknesses of the training?

  - Did the training accommodate your personal learning preferences?

  - What were the three most important pieces of information you learned from this training?
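When a smile sheet includes rating-scale items, such as the 4-point scale used in the case study later in this article, the responses can be summarized as a response rate plus average scores. The following Python sketch shows one minimal way to do that. The question IDs, the 1 to 4 scale, and the responses are all hypothetical, and open-ended answers like the ones above still need to be read and grouped by hand.

```python
from statistics import mean

# Hypothetical smile-sheet responses on a 1-4 scale (4 = most favorable).
# Each dict is one participant; the keys are illustrative question IDs.
responses = [
    {"tools": 4, "enjoyment": 4, "relevance": 3},
    {"tools": 3, "enjoyment": 4, "relevance": 4},
    {"tools": 4, "enjoyment": 3, "relevance": 4},
]

def summarize_reaction(responses, enrolled):
    """Return the response rate, the mean rating per question, and the overall mean."""
    questions = responses[0].keys()
    per_question = {q: round(mean(r[q] for r in responses), 2) for q in questions}
    return {
        "response_rate": round(len(responses) / enrolled, 3),
        "per_question": per_question,
        "overall": round(mean(per_question.values()), 2),
    }

# Hypothetical class of 4 enrolled participants, 3 of whom returned the survey.
summary = summarize_reaction(responses, enrolled=4)
print(summary)
```

Averages like these are easy to track from one session to the next, but they carry the same subjectivity caveat as any Level 1 data.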

Caveat

- Comments are subjective and can be skewed by bias regarding the trainer.

Level 2: Learning

Level 2 evaluates the degree to which participants acquire the intended knowledge, skills, attitude, confidence, and commitment based on their participation in the training.

This level gauges how well participants have developed expertise, knowledge, or a particular mindset. Evaluations often include formal or informal testing, and even self-assessments. If possible, ask individuals to take a pre-test before the training, then a post-test covering the same information. It’s one of the best ways to determine how much an individual learned from the training.
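The pre-test/post-test comparison described above can be computed per participant. The Python sketch below uses hypothetical names and scores. It reports the raw gain (post-test minus pre-test) and, as an optional extra that the Kirkpatrick Model does not prescribe, a normalized gain: the share of the available improvement each participant actually achieved.

```python
from statistics import mean

# Hypothetical percentage scores for the same participants before and after training.
pre_scores = {"ana": 55, "ben": 70, "chen": 40}
post_scores = {"ana": 85, "ben": 90, "chen": 70}

def learning_gains(pre, post, max_score=100):
    """Per-participant raw gain and normalized gain (fraction of possible improvement achieved)."""
    results = {}
    for name, before in pre.items():
        raw = post[name] - before
        headroom = max_score - before
        # Normalized gain is undefined when the pre-test score is already perfect.
        normalized = round(raw / headroom, 2) if headroom > 0 else None
        results[name] = {"raw": raw, "normalized": normalized}
    return results

gains = learning_gains(pre_scores, post_scores)
avg_raw = mean(g["raw"] for g in gains.values())
print(gains)
print(f"Average raw gain: {avg_raw:.1f} points")
```

The normalized figure helps when participants start at very different levels: a 30-point gain means more for someone who started at 55 than for someone who started at 40.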

Tools and techniques

- Measurement/evaluation should be simple and straightforward no matter the group size.

- Exams and assessments which are given prior to and immediately after training.

- Observations by peers, instructors, and managers.

- A clear, well-defined scoring process reduces the risk of inconsistent evaluation reports.

- Survey tools, often used at this level, compare the intended content of the training with what participants actually learned. Evaluators should ask themselves:

  - What new skills, knowledge, or attitudes did attendees acquire?

  - Did trainees fail to learn anything the training intended to teach?

  - Did participants truly understand the training?

  - Can participants apply the skills or perform the task?

Caveat

- Interviewing before and after the assessment is often time-consuming and unreliable.

Level 3: Behavior

Level 3 measures the degree to which participants apply what they learned during training when they are back on the job.

At this level, evaluators analyze changes in the learner’s behavior at work after completing the training. This helps determine whether trainees effectively use the knowledge, mindset, or skills from training in the workplace. This level tends to offer the truest evaluation of a training program, but it is also challenging: it is nearly impossible to anticipate when a person will use what they learned. As a result, it is harder to know when, how often, and how to evaluate participants after training. Because of these variables, it’s best to evaluate this level three to six months after training ends.

Tools and techniques

- Surveys and close observation, conducted after some time has passed, are necessary for evaluating any notable change, the importance of that change, and how long it will last.

- Incorporate online evaluations into current management and training methods used at your workplace.

- A 360-degree feedback evaluation can help accurately assess an individual’s performance, but it’s best used only after training is completed, not before.

- Develop assessments around applicable scenarios and clearly defined key performance indicators or requirements relevant to that specific job.

- Focus on actionable change and how well the training transfers once the attendee is back at work. Some questions to ask include:

  - Are participants applying the knowledge and skills they learned?

  - How are employees applying their new behavior or skills on the job?

Caveats

- Structure observations to minimize the observer’s personal opinions, which vary too much and can undermine the consistency and dependability of the assessments.

- Participants’ opinions can also vary too much, making evaluation unreliable. It’s essential that assessments focus on defined factors (e.g., quantifiable results at work) rather than opinions.

- Self-assessment can be useful, but only with a carefully designed set of guidelines.
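One way to keep Level 3 assessments focused on defined factors rather than opinions is a structured observation checklist: observers record how many required steps of a task an employee performed correctly, and the results are pooled into a compliance rate. The Python sketch below assumes a hypothetical checklist, hypothetical observations, and a 90 percent target chosen only to mirror the case study later in this article.

```python
from dataclasses import dataclass

@dataclass
class Observation:
    employee: str
    steps_required: int  # checklist steps defined for the observed task
    steps_followed: int  # steps the observer saw performed correctly

def compliance_rate(observations, target=90.0):
    """Pooled percentage of checklist steps followed, and whether it meets the target."""
    required = sum(o.steps_required for o in observations)
    if required == 0:
        raise ValueError("no checklist steps were observed")
    followed = sum(o.steps_followed for o in observations)
    rate = followed / required * 100
    return round(rate, 1), rate >= target

# Hypothetical observations recorded three to six months after training.
obs = [
    Observation("ana", steps_required=10, steps_followed=9),
    Observation("ben", steps_required=10, steps_followed=7),
    Observation("chen", steps_required=8, steps_followed=8),
]
rate, meets_target = compliance_rate(obs)
print(f"Compliance: {rate}% (meets target: {meets_target})")
```

Because every observer scores the same predefined steps, results depend far less on who did the observing.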

Level 4: Results

Level 4 measures the degree to which targeted outcomes occur as a result of the training and the accompanying support and accountability package.

We have finally reached the primary goal of training: reaching targeted outcomes that correspond with business goals. This level determines the overall success of the training program by measuring factors such as lowered spending, higher return on investment (ROI), improved quality, fewer accidents, more efficient production times, and a higher quantity of sales.

Ironically, although these factors are often the main business reason for training, Level 4 results are rarely evaluated because they can be hard to measure. For example, it can be difficult to link a training program directly to improved financial results, since many other factors also affect those numbers.

Tools and techniques

- Before training begins, discuss with the learner exactly what will be measured throughout and after the training. This will help them know what to expect.

- Use a control group.

- Allow time to measure and evaluate.

- Especially for the leadership team, schedule yearly evaluations and regular reviews of key business targets to accurately evaluate the business results of the training program.

- Look holistically at the intended goals of a program and determine whether the training achieved them:

  - What is the result of the training?

  - Did employees apply what they learned, or engage in new behavior, once back on the job?
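A control group makes it easier to separate the training’s effect from changes that would have happened anyway. A common way to compare the two groups (an assumption here; the Kirkpatrick Model does not prescribe a specific statistic) is a difference-in-differences: the change in the trained group minus the change in the control group. The metric and figures in this Python sketch are hypothetical.

```python
from statistics import mean

# Hypothetical metric: monthly error count per employee, before and after the program.
trained = {"before": [12, 10, 14, 11], "after": [7, 6, 9, 8]}
control = {"before": [11, 13, 12, 10], "after": [10, 12, 11, 10]}

def training_effect(trained, control):
    """Difference-in-differences: trained group's change minus control group's change."""
    trained_change = mean(trained["after"]) - mean(trained["before"])
    control_change = mean(control["after"]) - mean(control["before"])
    return trained_change - control_change

effect = training_effect(trained, control)
print(f"Estimated effect attributable to training: {effect:+.2f} errors per employee")
```

Here both groups improved, but the trained group improved by 3.5 more errors per employee, which is the portion a stakeholder might reasonably attribute to the training. A real evaluation would also need comparable groups and enough people to rule out chance; this sketch only shows the arithmetic.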

Caveat

- Poorly planned observations, and an inability to connect results to the training, make it harder to show how a training program has made a difference in the workplace.

A Case Study

A healthcare provider implemented a new electronic medical record (EMR) system but experienced inconsistent results. After meeting with stakeholders, the trainers defined two outcomes that would determine the success of the training program:

- Increased patient safety, particularly regarding incorrect pharmaceutical dosage.

- Reduction in healthcare costs.

After participants finished the training and sufficient time was given to complete all four levels of evaluation, the trainers presented their data to stakeholders.

Results

Level 1: Reaction

- Out of 480 students, 467 were evaluated.

- Students scored the instructors and classroom activities an average of 3.93 out of a possible 4 points.

Level 2: Learning

- Goal: Every student passes the assessment with a score of 100 percent.

- Actual: About 10 percent did not achieve a score of 100 percent.

- Adjustment: Those who did not pass received follow-up training.

Level 3: Behavior

- Goal: At least 90 percent of nurses comply with proper EMR procedures.

- Actual: Average compliance was 71.5 percent, below the goal.

- Adjustment: Leadership began requiring accountability reports from nursing units.

- Re-evaluation: Average compliance rose to 93 percent, exceeding the goal.

Level 4: Results

- Patient safety improved: medication errors with a severity level E or higher decreased from 1.5 defects per unit to 0.5 over three years.

- Healthcare costs decreased: by eliminating complications and potential malpractice claims from medication errors, operations costs dropped (the total amount depends upon local malpractice laws).
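The case study’s headline figures support a few derived numbers that can be useful when presenting to stakeholders. This Python sketch uses only the figures reported above; the variable names are illustrative.

```python
# Figures reported in the case study above.
students_total = 480
students_evaluated = 467
compliance_goal = 90.0     # percent of nurses following proper EMR procedures
compliance_initial = 71.5
compliance_after = 93.0
errors_before = 1.5        # severity E or higher medication errors per unit
errors_after = 0.5

# Level 1: share of students who completed the reaction evaluation.
response_rate = students_evaluated / students_total * 100
# Level 3: how far the first measurement fell short, and whether the goal was met later.
initial_gap = compliance_goal - compliance_initial
goal_met = compliance_after >= compliance_goal
# Level 4: relative reduction in medication errors over three years.
error_reduction = (errors_before - errors_after) / errors_before * 100

print(f"Level 1 response rate: {response_rate:.1f}%")
print(f"Level 3 initial shortfall: {initial_gap:.1f} points; goal met after re-evaluation: {goal_met}")
print(f"Level 4 reduction in medication errors: {error_reduction:.1f}%")
```

Going from 1.5 to 0.5 errors per unit is a reduction of about two-thirds, which is often a clearer message for stakeholders than the raw rates.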

As you can see from this case study, following the Kirkpatrick Model can help organizations train their teams and show changes in behavior that affect key business goals. One of the most important aspects of this case study is that it shows not just successes but also shortfalls, and the training team used that information to make their program more effective. (For a thorough understanding of this case study, read Achieving Patient Safety Using the Kirkpatrick Model by Dr. Jim Kirkpatrick, Wendy Kayser Kirkpatrick, and Linda Hainlen, available for free on the Kirkpatrick Partners website.)

Advantages and Disadvantages of the Kirkpatrick Model

As with any evaluation model, there are both advantages and disadvantages to using Kirkpatrick.

Advantages

There’s a reason Kirkpatrick is so widely used across the world: it helps measure a training program’s success so that trainers and executives understand if the program is effective. Trainers gain insights into their programs’ effectiveness and make data-driven decisions about investment in, and creation of, future training.

- Allows organizations to evaluate various levels of training outcomes.

- Trainees share their feelings about the usefulness and applicability of the training, and whether their new knowledge affects their performance.

- Provides a systematic method to assess training programs, helping IDs understand where to make necessary improvements.

Disadvantages

As with any model or approach, Kirkpatrick does have some limitations.

- Kirkpatrick focuses on training and development programs but doesn’t consider other factors that may impact employee performance, such as motivation, leadership style, or organizational culture.

- In practice, evaluations using this model tend to measure participants’ reactions and learning more than actual behavioral changes and business outcomes. (Published case studies that include a Level 4 evaluation are relatively hard to find.)

While several training evaluation models exist, most organizations rely on Kirkpatrick simply because it’s familiar and easy to implement.

However, Kirkpatrick’s popularity doesn’t make it the right tool for every company and every situation. It also tends to be more successful when combined with other tools than when used alone. Include other approaches (the Phillips ROI Methodology, Brinkerhoff’s Success Case Method, etc.) to see a more comprehensive picture of your training’s impact.
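For reference, the Phillips ROI Methodology expresses return on a training investment as ROI (%) = (net program benefits / program costs) × 100, alongside a benefit-cost ratio (BCR) of program benefits divided by program costs. The Python sketch below applies those two formulas to hypothetical, already-monetized figures. Converting business results into dollar benefits is the hard part and is not shown here.

```python
def phillips_roi(benefits, costs):
    """Return (benefit-cost ratio, ROI percent) for a training program."""
    if costs <= 0:
        raise ValueError("costs must be positive")
    bcr = benefits / costs
    # Net benefits are total benefits minus total program costs.
    roi_percent = (benefits - costs) / costs * 100
    return bcr, roi_percent

# Hypothetical: $150,000 in monetized benefits from a program that cost $60,000.
bcr, roi = phillips_roi(benefits=150_000, costs=60_000)
print(f"BCR: {bcr:.2f}:1, ROI: {roi:.0f}%")
```

A BCR of 2.5:1 and an ROI of 150 percent describe the same result: every dollar spent returned $2.50 in benefits, or $1.50 after recovering the cost.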

Final Thoughts

The Kirkpatrick Model is just one of several training evaluation models. While all models have their pros and cons, the key factor is HOW you use them. With the Kirkpatrick Model, you must first have a clear idea of the results stakeholders want to achieve, then work backward from them. That is how you complete all four levels and prove that your training is not only effective in teaching knowledge, skills, and new behaviors, but also helps meet business goals.

As L&D’s influence with executives continues to surge, training is becoming a “must-have,” not a “nice-to-have.” L&D must show its impact and connection to business results to keep growing. Using the Kirkpatrick Model, in conjunction with other tools, helps trainers prove their programs achieve results. When you tie ROI and other goals to specific trainings, your chances of obtaining funding and support for future learning solutions increase significantly. That’s something that every L&D department wants to hear.

Resources

“20 Pros and Cons of Kirkpatrick Model.” Ablison. Accessed 6/27/23. https://www.ablison.com/pros-and-cons-of-kirkpatrick-model

“2023 Workplace Learning Report: Building the agile future.” LinkedIn Learning. Accessed 6/27/23. https://learning.linkedin.com/resources/workplace-learning-report

Andreev, Ivan. “The Kirkpatrick Model.” Valamis. 6/17/23. Accessed 6/27/23. https://www.valamis.com/hub/kirkpatrick-model

Kirkpatrick, Dr. Jim, Wendy Kayser Kirkpatrick, Linda Hainlen. Achieving Patient Safety Using the Kirkpatrick Model. 10/21. Accessed 6/27/23. PDF.

Kurt, Dr. Serhat. “Kirkpatrick Model: Four Levels of Learning Evaluation.” Educational Technology. 9/6/18. Accessed 6/27/23. https://educationaltechnology.net/kirkpatrick-model-four-levels-learning-evaluation

“The Kirkpatrick Model.” Kirkpatrick Partners. https://kirkpatrickpartners.com/the-kirkpatrick-model